On the delicate neutrality of UFO/UAP investigations

The following point of view draws on many years of observing how individuals approach unexplained phenomena and what they expect from them, as well as on my personal experience.

We are not all equal when it comes to interpretation. This may seem obvious, but in daily life we too often forget that we are all driven by our own beliefs about the world and about reality, and that these beliefs shape our positions and opinions all the more strongly. They can permeate any kind of thought or idea, but even more so on subjects that at first glance have no obvious answer, such as UAPs (Unexplained Aerospace Phenomena).

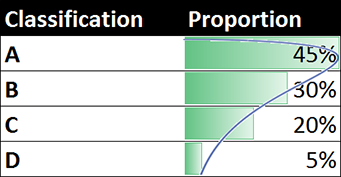

For example, suppose you present the same investigation file to a group of (very serious) experts and ask them to vote on the classification they consider appropriate ("A/B/C/D"). If the phenomenon is not easy to recognize, you will usually get a wide range of responses. The distribution of their opinions will roughly approximate a skewed normal distribution (sometimes shifted to the right, sometimes to the left, sometimes centered, depending on the case):

This diversity of responses is always surprising to observe. Fortunately, this approach usually leads to a consensus and can be used to establish a classification in which one can have relative confidence. Indeed, when no single expert is qualified to account for a given phenomenology, this method makes it possible to frame reality by cross-checking points of view, and thus to find a way forward. This is the principle of the "wisdom of crowds", and it is also the principle used by the justice system, which relies on a jury to render a judgment that is as balanced as possible: here too, the diversity of opinions and viewpoints among the people consulted matters a great deal.
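The vote-pooling idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `summarize_votes`, the 50% `consensus_threshold` and the sample votes are all hypothetical, and a simple "most-voted class above a threshold" rule is just one possible way of aggregating expert opinions, not the procedure of any particular panel.

```python
from collections import Counter

def summarize_votes(votes, consensus_threshold=0.5):
    """Tally expert votes over the classes A/B/C/D.

    Returns (shares, modal_class, consensus_reached), where shares maps each
    class to its fraction of the votes. consensus_threshold is a hypothetical
    parameter: the minimum share the most-voted class must reach.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    counts = Counter(votes)
    total = len(votes)
    shares = {cls: counts.get(cls, 0) / total for cls in "ABCD"}
    # On a tie, most_common keeps the class that was encountered first.
    modal_class, modal_count = counts.most_common(1)[0]
    consensus_reached = modal_count / total >= consensus_threshold
    return shares, modal_class, consensus_reached

# Invented votes from a hypothetical panel of ten experts.
panel_votes = ["A", "B", "B", "C", "B", "C", "D", "B", "C", "B"]
shares, modal_class, consensus_reached = summarize_votes(panel_votes)
print(shares, modal_class, consensus_reached)
```

Reporting the full distribution, and not only the winning class, keeps visible how divided the panel was.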

Unfortunately, "birds of a feather flock together", and "in the wild" (on the Internet...) ufologists (believers on one side, skeptics on the other) tend to group into communities, which does not favor the objectivity of the judgments they render.

To get to the heart of the matter, I will summarize the two opposing approaches to UFO phenomena, which in my opinion are always based on values, and in particular on beliefs: the skeptical/rationalist ufologists on one side and the believing ufologists on the other. These are not homogeneous groups; as in politics, there are extremists on both sides and, in the middle, a majority of moderates.

The tendencies I list below are deliberately drawn from the extremes, in order to highlight the points discussed later.

![Skeptic versus believer](<Sceptic versus believer.png>)

"The scientific skeptic seeks to maintain a 'delicate balance' between the tendency 'to scrutinize in a relentlessly skeptical way all the hypotheses that are submitted to us', on the one hand, and 'the one that invites us to remain open to new ideas', on the other. If you are only skeptical, Sagan said, 'no new ideas ever reach you; you never learn anything new; you become a crotchety old person convinced that nonsense is ruling the world (and, of course, there is much data to support you). On the other hand, if you are open to the point of gullibility and have not an ounce of skeptical sense in you, then you cannot distinguish useful ideas from worthless ones. If all ideas have equal validity, you are lost: for then no idea has any value.'" (Carl Sagan)

Confirmation bias, or expertise bias

Each expert, depending on his or her specialty, will tend to see in the facts the phenomena he or she is familiar with. For a psychologist specializing in hallucinations, UAP D cases will first of all be hallucinations; for an astronomer, the phenomena will first of all be caused by the moon or the stars; for a physicist specializing in plasmas, first of all by ionization mechanisms; and so on.

"Confirmation bias" consists of favoring information that confirms one's preconceptions or hypotheses (regardless of whether that information is true) and/or giving less weight to hypotheses that run counter to them. As a result, people subject to this bias gather or recall information selectively and interpret it in a biased way. It is also said that such people "pull reality toward themselves".

Confirmation bias operates in different ways in the different communities, believers and skeptics alike. Hence the importance of confronting these communities, and more generally the experts, with one another, so that the approach is truly holistic and as neutral as possible. As in the search for legal evidence, one cannot work only for the prosecution or only for the defense: the approach must be balanced, for the prosecution AND for the defense.

Information reduction and margin of error

Confirmation bias manifests itself in several ways, in particular by ignoring an important point to which I would like to draw your attention: the "margin of error".

A skeptic may note: "There was a star next to the position indicated by the witness!" and conclude from this: "It is a star!"

So be it. But how far (in degrees) from the position of the phenomenon described by the witness was your star? Ultimately, what margin of error was assumed in this case, and what margin is acceptable in general?

Asking this kind of question seems fundamental to me: if we leave it out, there are no limits any more. In this example, any star in the sky will do. An unrestrained, uncontrolled skeptical posture can be just as harmful as the believing posture.

Yet the skeptic generally ignores the margin of error and implicitly treats it as small enough for the phenomenon described by the witness to match the "hypothesis": "it is roughly in the same direction, so by the principle of simplicity it can be nothing else". QED.

But can we accept anything at all? Can we accept, for example, an average error of 30° on the part of witnesses, even when they used a fixed reference point in the landscape? It is difficult to answer this kind of question objectively. Yet it is essential.

Others blithely take this step. But to accept that an error of 30° is commonly made, even with a reference point, is to accept that any bright point in the sky can ultimately be matched with a star. It is easy and within anyone's reach: with any sky-mapping software, one can find a star within a circle of 30 degrees radius around more or less any direction of observation.
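To make the question of the margin of error concrete, here is a minimal Python sketch; the function names are hypothetical and not taken from any astronomy package. `angular_separation` computes the great-circle distance between the direction given by the witness and the direction of a candidate star, both as (azimuth, elevation) in degrees. `cap_fraction_of_sky` gives the share of the visible hemisphere covered by a circle of a given angular radius, assuming the circle lies entirely above the horizon. `expected_in_cap` further assumes, for simplicity, that candidate objects are spread uniformly over the sky, which real stars are not.

```python
import math

def angular_separation(az1, alt1, az2, alt2):
    """Great-circle separation, in degrees, between two directions given as
    (azimuth, elevation) in degrees, e.g. the witness's direction and a star."""
    az1, alt1, az2, alt2 = map(math.radians, (az1, alt1, az2, alt2))
    cos_sep = (math.sin(alt1) * math.sin(alt2)
               + math.cos(alt1) * math.cos(alt2) * math.cos(az1 - az2))
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_sep))))

def cap_fraction_of_sky(radius_deg):
    """Fraction of the visible hemisphere (2*pi steradians) covered by a circle
    of the given angular radius, assuming the circle lies entirely above the horizon."""
    solid_angle = 2 * math.pi * (1 - math.cos(math.radians(radius_deg)))
    return solid_angle / (2 * math.pi)

def expected_in_cap(n_objects_above_horizon, radius_deg):
    """Expected number of candidate objects inside the circle, under the
    simplifying (and unrealistic) assumption that they are spread uniformly."""
    return n_objects_above_horizon * cap_fraction_of_sky(radius_deg)

for radius in (2, 5, 10, 30):
    print(f"{radius:>2} deg radius covers {cap_fraction_of_sky(radius):.4f} of the visible sky")
```

A 30° radius covers about 13% of the visible hemisphere (1 - cos 30° ≈ 0.134), against roughly 0.4% for a 5° radius. Whatever the actual number of bright stars above the horizon, accepting 30° instead of 5° multiplies the number of candidate stars by about 35.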

Another example: the skeptic may assert: "There was a plane in the field of view!" and conclude from this: "It is a plane!"

So be it. But again with what margin of error?

This margin of error should be checked for each of the elements describing, on the one hand, the phenomenon observed by the witness and, on the other, the proposed explanatory phenomenon (the hypothesis): mainly size, shape, color, elevation, azimuth, trajectory and speed. For example, how large a difference in angular size is acceptable? The skeptic will accept reducing the angular size of the phenomenon 10 times, 20 times, 30 times or even more, until the reduction makes it compatible with the aircraft... Here again, what limits should be set? Has this been written down or studied anywhere? If we set ourselves no limits on the reduction of information, we can justify anything. The problem with airplanes (somewhat as with stars) is that they are everywhere, all the time: within a radius of a few dozen kilometers around any person, you will find some every day. By extension, it will always be possible to find a plane that passed through the field of observation:

![Aircraft overflight density in France](<Density aero france full.png>)

Aircraft overflight density in France: this map plots aircraft trajectories derived from transponder data (RadarVirtuel data, compiled by myself). Paris is shown in red, at the center. What level of discrimination should we adopt when it comes to the presence of aircraft?
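A rough way to quantify the aircraft problem, under loudly simplifying assumptions, is to treat passages through the observed sector as Poisson arrivals: if on average λ aircraft cross the sector per unit time, the probability of at least one passage in a window of duration t is 1 - e^(-λt). The Python sketch below further assumes (hypothetically) that the rate grows linearly with the azimuth width of the sector searched. `prob_match` and `RATE` are illustrative names, and the rate is a placeholder that would have to be estimated from transponder data such as those in the map above, not a measured value.

```python
import math

def prob_match(rate_per_hour_per_deg, sector_width_deg, time_window_min):
    """Probability that at least one aircraft crosses the searched sector during
    the time window, assuming Poisson arrivals whose rate grows linearly with the
    azimuth width of the sector (a deliberately crude, hypothetical model)."""
    expected = rate_per_hour_per_deg * sector_width_deg * time_window_min / 60.0
    return 1.0 - math.exp(-expected)

# Placeholder rate: NOT a measured value.
RATE = 0.5  # aircraft per hour per degree of azimuth (hypothetical)
for width, minutes in [(5, 10), (10, 20), (30, 60)]:
    p = prob_match(RATE, width, minutes)
    print(f"sector {width} deg, window {minutes} min: P(at least one plane) = {p:.3f}")
```

Whatever the true rate, widening the tolerance in angle or in time increases the expected count, and the probability of "finding a plane" tends toward 1. This is why the tolerance has to be fixed before searching, not after.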

These two examples perfectly illustrate "information reduction", which rests on the absence of precise measurement of the gap between the information provided by the witness and the proposed phenomenon. In other words, as long as we have not agreed on a margin of error we can allow ourselves, we will not move forward. It is an essentially empirical subject in which everyone can do as they please, as long as there is no clear consensus on "what can be normal and what is not".

I would say this point is fundamental. And yet leaving it unaddressed suits everyone's assumptions: believers and skeptics alike.
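One way to make the margins of error explicit is to state a tolerance for each attribute before comparing, and then list the attributes for which the gap between the witness's report and the hypothesis exceeds it. The Python sketch below covers only three of the attributes listed earlier (azimuth, elevation, angular size); the class and function names and, above all, the tolerance values are hypothetical placeholders, not established standards. `angular_size_deg` uses the standard formula θ = 2·arctan(size / (2·distance)).

```python
import math
from dataclasses import dataclass

def angular_size_deg(size_m, distance_m):
    """Apparent angular size, in degrees, of an object of linear size size_m seen at distance_m."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))

@dataclass
class Observation:
    azimuth_deg: float
    elevation_deg: float
    angular_size_deg: float  # must be > 0

# Hypothetical tolerances, stated BEFORE the comparison; placeholders, not standards.
TOLERANCES = {
    "azimuth_deg": 10.0,    # max azimuth gap, degrees
    "elevation_deg": 10.0,  # max elevation gap, degrees
    "size_ratio": 3.0,      # max ratio between the larger and the smaller angular size
}

def azimuth_gap(a, b):
    """Smallest difference between two azimuths, handling the 360/0 wrap-around."""
    d = abs(a - b) % 360
    return min(d, 360 - d)

def check_hypothesis(witness, hypothesis, tolerances=TOLERANCES):
    """Return the attributes whose gap exceeds its tolerance (an empty dict means compatible)."""
    gaps = {
        "azimuth_deg": azimuth_gap(witness.azimuth_deg, hypothesis.azimuth_deg),
        "elevation_deg": abs(witness.elevation_deg - hypothesis.elevation_deg),
        "size_ratio": max(witness.angular_size_deg, hypothesis.angular_size_deg)
        / min(witness.angular_size_deg, hypothesis.angular_size_deg),
    }
    return {name: gap for name, gap in gaps.items() if gap > tolerances[name]}

# Hypothetical case: the witness reports a 5-degree object; the candidate is
# an airliner about 60 m long at about 10 km.
witness = Observation(azimuth_deg=200, elevation_deg=30, angular_size_deg=5.0)
plane = Observation(azimuth_deg=205, elevation_deg=28,
                    angular_size_deg=angular_size_deg(60, 10_000))
print(check_hypothesis(witness, plane))
```

With these invented figures the airliner subtends about 0.34°, so the reported size is about 14.5 times larger: the direction matches, but the size ratio is flagged instead of being silently "reduced". Shape, color, trajectory and speed would each need their own explicit criterion in the same spirit.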

Information reduction and "Top-Down" approach

This approach is favored by both believers and skeptics: the tendency to assume that the witness's observation preferentially corresponds to a "known" phenomenon or, respectively, to an "unknown" one.

A skeptic will try to make a phenomenon fit an explanatory hypothesis that generally comes from a domain he masters well: his personal field of expertise. If the skeptic is an ornithologist, he will favor explanations based on birds; if he is interested in astronomical phenomena, his explanations will be based on the stars. And so on.

This "top-down" approach implies a preconception, one that unfortunately steers the research from the outset. In psychology, this is called "confirmation bias". Once he has chosen his hypothesis, the skeptic finds it hard to admit that it "does not work", that it is not necessarily the reality described, since for him (he has a "nose", or "experience") it is "necessarily the phenomenon he has in mind and/or is used to": "It can only be that, in that direction!" This goes with an underlying posture: maintaining credibility with others and justifying that his choice is the right one. As for the elements that do not fit his hypothesis, or that do not correspond to the aspects described by the witness (even very distant ones), rather than explaining them he will dismiss them, omit them or find some way around them: after all, "a 30-degree error between the phenomenon and the star? That's not a problem." And the rest? If it has to be explained, then, for lack of knowledge, it could be a hallucination. If he can, he will not mention it at all. Instead, he will concentrate on the descriptive aspects of the phenomenon that coincide: "It fits the angular direction perfectly; if he had seen the phenomenon where he says, he would necessarily have described the plane (the moon, the star, etc.)". In this case, the witness's failure to mention elements of the surroundings becomes proof for the skeptic (sic!).

Using as confirmation or proof (!) the fact that "the witness did not see...", "did not describe..."

In the case of experiences perceived and lived as "extraordinary" by witnesses, with a strong emotional impact on them, skeptics generally expect the witness to have noticed and described elements of the surroundings: in particular the presence of the moon, stars, planes, etc.

Unfortunately, there is an elementary principle of psychology that is totally ignored in this case by the "skeptics", or at least by those who practice such "information reduction":

- The witness is struck and absorbed by the sight of the phenomenon, so much so that his peripheral vision narrows and he focuses entirely on what he is observing, forgetting everything else. This is a commonplace phenomenon that occurs every day, for example when we drive. The more engaged one is with the phenomenon, "the more the environment fades away", and the more the field of vision narrows onto the phenomenon being followed.

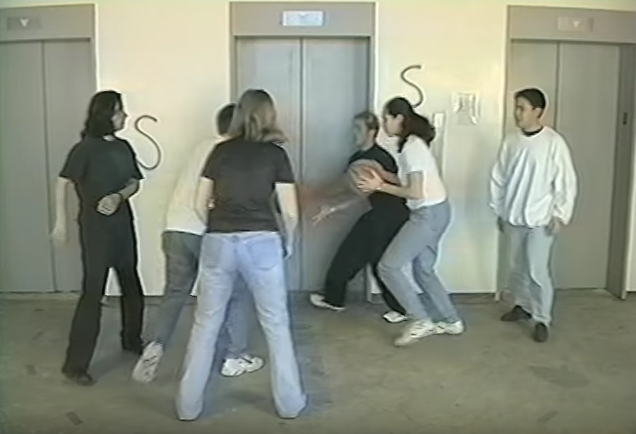

On this subject, you have probably heard of the following two experiments. The first is used by road safety authorities; about 10 out of 150 people pass it.

Test 1. Psychology of perception: follow the coin and find out where it ends up.

Test 2. Psychology of perception: Count the number of passes.

Results:

In test 1, about 8-9% of people saw the bell pepper.

In test 2, about 50% saw the much larger gorilla.

This well-documented phenomenon is called "inattentional blindness".

Would it be "abnormal", when a witness is absorbed by a phenomenon that fascinates him, not to notice, or not to think of describing, a star or the moon a few degrees away, or an object with an angular size 30 times smaller located near or even behind the phenomenon? Especially when the cognitive load is very high (cf. "mental workload")? This really raises questions.

To give you an idea, a related effect known in criminal psychology is the "weapon focus" effect: a witness tends to focus on the weapon held by a perpetrator, which impairs his or her memory of other aspects of the crime scene, including the offender's face. This effect, first noticed by investigators, has been confirmed by several experimental studies, and its explanation appears to be linked to inattentional blindness combined with emotional and mental load.

This question hardly seems to concern skeptics: there is little or no trace of it in the forums. And yet, in light of the above, it is not statistically far-fetched that a plane, a star, the moon (etc.) should lie close to a phenomenon described by a witness; and that the witness does not describe it, because he is absorbed in his observation, is perhaps understandable. However, this is taken as an argument "for the prosecution": the witness did not see the sun, the moon, the star, the plane, etc., therefore something is not right. From there, the skeptic has a choice:

Either the witness is unreliable, unable to describe obvious elements of the scene; or, worse, the witness is confused, and in reality it was the sun, the moon, the star, the plane, etc. that he was looking at.

I rest my case (!)

Community attitude

Social recognition and conformity of individuals

Meanwhile, for the skeptic, the witness's description must be "tortured" and "reduced" until it fits the idea he has formed. He is there to "sell" the phenomenon he has selected, and failing to do so is, unconsciously, a failure for him: he "MUST" provide an explanation with the elements available today, even if these elements are partial. It is also a way of seeking recognition, possibly exacerbated within a community: showing others that one is "good" because one "finds" an explanation. Conversely, not finding the solution to a phenomenon is at best "contributing nothing to the community" and at worst being "bad" or showing incompetence (especially in front of outsiders!).

This approach, which consists of starting from the chosen phenomenon, can be very biased if it is systematic and never confronted with other hypotheses, and it sets up a rather perverse mechanism based not on the facts but on "the idea one has of them". Not to mention the emotions that may be attached to it: "What would others say?" It should never come to that, because it implies having to explain a phenomenon at all costs, even when the explanation does not work, which seems to me completely absurd. The "critical mind" then becomes a "mind that criticizes for the sake of criticizing". Through attitude and gamesmanship, it can lead to denigration and to a "clan" spirit within the community. Signs of this non-neutral attitude, such as "jabs" and slightly mocking or insolent criticism of "deviants", are common.

"UAP D": why classify as "D", and what is the point?

However, when the investigation is well conducted, showing that no explanation can be found should be as noteworthy as finding one. A "UAP D" could be the effect of a hallucination or a drone, but without clear arguments, trying to impose an answer is counterproductive: it denies science the chance to explain the phenomenon objectively and to understand the mechanisms at work (for example, how could a hallucination arise?). Classifying as "D" does not mean, as many people think, giving credence to the craziest hypotheses, or even to the extraterrestrial hypothesis. Classifying as "D" means putting a symbolic spotlight (although, in absolute terms, one should not stop at this A/B/C/D classification; I will come back to this) on a phenomenon on which one must take the time to pause and look at it in perspective: it may in fact be explained later, thanks to advances in science or in technical tools. The simple fact of focusing on it is of interest to scientists.

What is the point of inflating the "C" category? Phenomena in category "C" are a priori excluded from the concerns of science and understanding. They should not be, because many "C" cases turn out to be very intriguing, especially for the psychology of perception, where we have much to learn. Fortunately, we have other tools that allow us to recover them; I discuss this in another article on classification.

In the meantime, what both skeptics and believers do is sweep the margins of error "under the carpet". For them, there is no point in trying to calculate them or pin them down: "it is obvious that the phenomenon is 'that'!" (a real UFO or a real known phenomenon). Poor science: this will not help it advance.

Identifying the limits of reality

However, admitting that one does not know, and identifying the limits of one's hypotheses while highlighting the remarkable points of difference, would seem to be the right approach, rather than attaching so much importance to one's image, one's ego, or what people will say. Humility is essential in this approach. You know what a certain Pascal said? It should be as simple as that. Alas, it is not simple for the human brain, which is a "classification machine": "I don't know" feels like a failure. And the fact that no one talks about limits or margins of error suits everyone, skeptics and believers alike. Yet this is where the stumbling blocks lie: "the devil is in the details". For the believer, it is simpler. Declaring oneself "skeptical" from the outset seems to me a non-neutral attitude with respect to an approach that should be guided by the data: first a neutral bottom-up approach, then a confrontation with the top-down hypotheses, measuring the margins of error for each hypothesis.